Juggling Crises: Using AI to Support Clients and Clinicians

Sometimes you land in a particular professional field because it’s the only place you can imagine yourself, drawn to one vocation over any other by passion and intensity.

And sometimes you land there because you’re good at math. At least, that’s how Research Assistant Professor Meghan Broadbent came to social work.

Her multidisciplinary research occupies a space where program evaluation, data science, and human services intersect. Within that space, her work has included researching emergency dispatch protocols, evaluating the efficacy of family support programs, and conducting provider service planning for mental healthcare clinicians.

Her latest research project, her doctoral dissertation, examines the integration of artificial intelligence (AI) and machine learning tools to help crisis workers both identify clients’ risk of suicide and manage caseloads.

Suicidal ideation is on the rise across the country. Nearly a quarter of all youth in the United States experience suicidal ideation every year, and suicide is the second leading cause of death among Americans aged 10-34. Closer to home, it’s the number one cause of death for Utahns in that age group.

People can seek professional help in a variety of ways, one of which is text-based crisis lines. Because most people already have access to text messaging, this form of support is particularly accessible and inexpensive. Noting the increase in participation, Prof. Broadbent, who serves as associate director of the College of Social Work’s Social Research Institute, had a variety of questions: How do we assess quality of care? Ensure fidelity of care? Provide adequate training and support for crisis line professionals? Protect counselors who are in short supply and at risk of burnout?

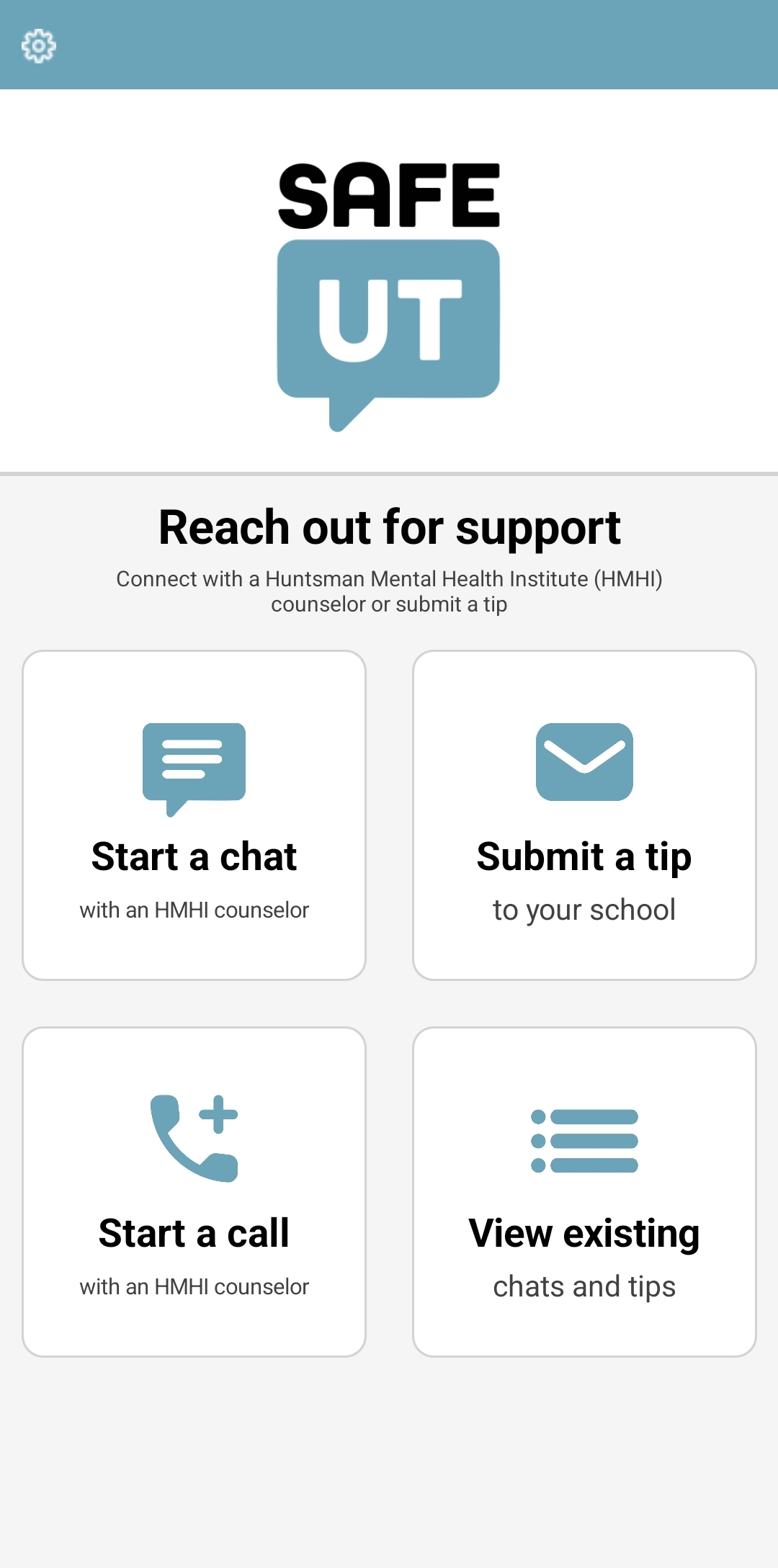

Leading an interdisciplinary team that included peers from educational and clinical psychology, the mental health professions, and computer science, Prof. Broadbent set out to find some answers. She decided to study SafeUT, a Utah-based counseling app. Though it started as an anonymous tip line for K-12 students, it was later adopted for wider counseling use and broadened to include people of all ages. When using the app, Utahns are connected within seconds to a licensed (or license-eligible) clinician; most of these clinicians are social workers. People can use the app to submit tips concerning family members and friends, or to seek support for themselves with something they’re struggling with, whether that be bullying, difficulty handling the pressures of school, or anything else affecting mental health and wellbeing.

Prof. Broadbent’s team was able to analyze four years of data, which included over 80,000 chats, two million messages, 90 counselors, and 33,000 clients. Her research focused on two main questions: Can we train an AI model to help a counselor detect risk of suicide among clients who text in to the service? And, can we build a model to help counselors manage their caseloads?

To study this, the team first built a natural language processing model that separated out every word typed by a client or a clinician and assigned a numerical value to that word. These values, in turn, produced a numeric score for each phrase, from which the model could predict the topic of a message. Once the team had helped the model flag which words, statements, and phrases indicate a risk of suicide or suicidal ideation, the model was able, over time, to identify new messages that exhibited a high risk of suicide.
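The article does not say which model the team used, so the following is only a minimal sketch of the general idea described above: split each message into words, give every word a numeric value learned from flagged examples, and add those values up to score a new message. The log-odds weighting, the function names, the threshold, and the four toy training messages are all assumptions made for illustration, not the team's method or data.

```python
import math
import re
from collections import Counter


def tokenize(message):
    """Split a message into lowercase word tokens."""
    return re.findall(r"[a-z']+", message.lower())


def train_word_weights(labeled_messages, smoothing=1.0):
    """Give each word a numeric weight: the smoothed log-odds of the word
    appearing in flagged messages versus unflagged ones."""
    flagged, unflagged = Counter(), Counter()
    for text, is_flagged in labeled_messages:
        (flagged if is_flagged else unflagged).update(tokenize(text))
    vocab = set(flagged) | set(unflagged)
    flagged_total = sum(flagged.values()) + smoothing * len(vocab)
    unflagged_total = sum(unflagged.values()) + smoothing * len(vocab)
    return {
        word: math.log((flagged[word] + smoothing) / flagged_total)
        - math.log((unflagged[word] + smoothing) / unflagged_total)
        for word in vocab
    }


def score_message(message, weights):
    """Sum the word weights of a message; unseen words contribute 0."""
    return sum(weights.get(token, 0.0) for token in tokenize(message))


def is_high_risk(message, weights, threshold=0.0):
    """Flag a message whose summed score exceeds the (assumed) threshold."""
    return score_message(message, weights) > threshold


# Hypothetical toy examples; a real system would learn from many
# counselor-labeled messages, not four invented sentences.
TOY_TRAINING = [
    ("i feel hopeless and want to give up", True),
    ("i can't go on anymore", True),
    ("i have a math test tomorrow", False),
    ("my friend and i had an argument at school", False),
]
WEIGHTS = train_word_weights(TOY_TRAINING)
```

With these toy weights, `is_high_risk("i feel hopeless", WEIGHTS)` is true and `is_high_risk("math test at school", WEIGHTS)` is false. A score like this would only ever be a prompt for a counselor's attention, never a substitute for their judgment.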

To verify accuracy, the evaluations made by the AI model were compared with notes the counselors made indicating the level of risk in a chat. The research team ran these numbers through multiple statistical tests. Across repeated tests, the model achieved an average accuracy of about 90%. In other words, the AI model correctly detected the level of suicide risk about 90% of the time.
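As a rough illustration of what "average accuracy" means here, the sketch below compares a model's risk labels with counselor-assigned labels and averages the agreement rate over several test runs. The label names, the function names, and the toy runs are hypothetical; the article does not describe the team's actual statistical tests.

```python
from statistics import mean


def accuracy(model_labels, counselor_labels):
    """Fraction of chats where the model's label matches the counselor's."""
    if len(model_labels) != len(counselor_labels):
        raise ValueError("label lists must be the same length")
    if not counselor_labels:
        raise ValueError("need at least one labeled chat")
    matches = sum(m == c for m, c in zip(model_labels, counselor_labels))
    return matches / len(counselor_labels)


def average_accuracy(runs):
    """Mean accuracy over repeated test runs.

    Each run is a (model_labels, counselor_labels) pair.
    """
    return mean(accuracy(model, counselor) for model, counselor in runs)


# Invented toy runs, for illustration only (not the study's data).
TOY_RUNS = [
    (["high", "low", "low", "high", "low"], ["high", "low", "high", "high", "low"]),
    (["low", "low", "high", "high", "low"], ["low", "low", "high", "high", "low"]),
]
```

In this toy example the first run agrees on four of five chats and the second on all five, so `average_accuracy(TOY_RUNS)` comes out at about 0.9.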

Part of this accuracy is thanks to the kind of data they’re working with. “When people are running this kind of research, they’re usually looking at chat-level data from notes created afterward, summary notes a therapist makes after an appointment,” explained Prof. Broadbent. “But here, we’re going down to the message level. We have full, perfect transcripts that require no interpretation.” Prof. Broadbent is excited about the training implications here. “We know that the level of risk fluctuates in these conversations, and with this precise, rich data, we’re able to map those changes, to see real reactions to different types of therapy.”

As Prof. Broadbent dug into the numbers, she couldn’t help but notice the times when a counselor didn’t log an assessment of the chat; she wanted to understand more of what was happening for those counselors.

In a therapy appointment in an office setting, there is one client, one counselor, and one conversation that takes 30-50 minutes. The amount of time per client varies significantly for crisis phone-in hotlines, but the numbers are similar: one client, one counselor, and one conversation. But for a text-based crisis chat line? It’s one client and one counselor … potentially times ten.

Using the data available from SafeUT records, Prof. Broadbent and her team were able to map out the chat caseloads of individual counselors over the course of their shifts. Looking at data in ten-minute increments, she found that counselors were usually engaged in three to five chats in a ten-minute window, though the upper limit was 16. Keep in mind, this is a crisis support line for high-risk clients in potentially life-threatening circumstances.
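One way to picture this kind of caseload mapping is to bucket message timestamps into ten-minute windows and count how many distinct chats each counselor touched in each window. The sketch below assumes a simple record shape of (counselor ID, chat ID, timestamp) and treats a chat as "engaged" if it has at least one message in the window; both the record shape and that definition are assumptions, since the article does not describe how the team structured its data.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def window_start(timestamp):
    """Round a timestamp down to the start of its ten-minute window."""
    return timestamp - timedelta(
        minutes=timestamp.minute % 10,
        seconds=timestamp.second,
        microseconds=timestamp.microsecond,
    )


def chats_per_window(messages):
    """Count distinct chats per counselor per ten-minute window.

    `messages` is an iterable of (counselor_id, chat_id, timestamp) tuples.
    Returns a dict keyed by (counselor_id, window_start).
    """
    active = defaultdict(set)
    for counselor_id, chat_id, timestamp in messages:
        active[(counselor_id, window_start(timestamp))].add(chat_id)
    return {key: len(chat_ids) for key, chat_ids in active.items()}


# Hypothetical records: one counselor handling three chats at once.
TOY_MESSAGES = [
    ("c1", "chat-a", datetime(2024, 1, 5, 14, 1, 30)),
    ("c1", "chat-b", datetime(2024, 1, 5, 14, 4, 0)),
    ("c1", "chat-c", datetime(2024, 1, 5, 14, 9, 59)),
    ("c1", "chat-a", datetime(2024, 1, 5, 14, 12, 0)),
]
CASELOAD = chats_per_window(TOY_MESSAGES)
```

Here `CASELOAD` records three concurrent chats for counselor `c1` in the 14:00 window and one in the 14:10 window. Taking the maximum of these counts across a shift would give the kind of upper limit the article mentions.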

“Typically, when we talk about caseload, we measure that on a scale of days, weeks, months,” said Prof. Broadbent. “But for text-based crisis counselors, we’re talking about caseload by the minute.” This high cognitive burden can impair a counselor’s linguistic functioning, raise negative emotions, and increase risk of burnout. With the data Prof. Broadbent and her team have collected so far, they intend to develop a cognitive load index to identify maximum caseloads for this important therapeutic delivery method.

“This is vital treatment,” said Prof. Broadbent. “We’re talking about critical, life-saving care. These are new systems and it takes time to understand how they are functioning and where adjustments are needed. As we understand more of what it means to provide mental healthcare through a digital platform, we need to be intentional about setting up a system that allows for client and therapist success.”